Parallel Programming with Swift

Learn Concurrency, Parallelism and Dispatch Queues, Target Queue, Dispatch Group, Barrier, Work Item, Semaphore & Dispatch Source, Operation and OperationQueue, Block Operation, Asynchronous Operation and passing data between operations in this article:

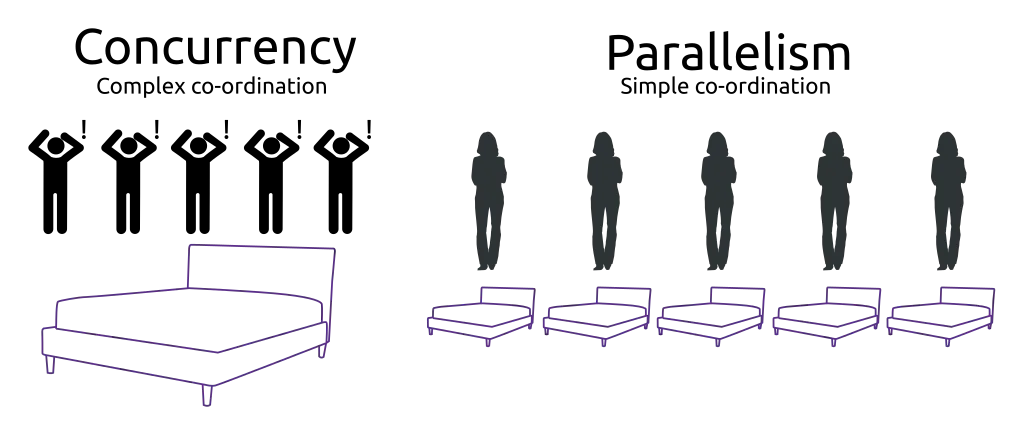

Whenever we hear parallel programming, two confusing terms come to mind: concurrency and parallelism. Let’s first look at their roles in real life:

Concurrency: Our day-to-day multitasking. We often juggle several tasks and it feels like we are doing them at the same time, but in reality we are context switching between them. This has its limits: we may get through hundreds of tasks a day this way, but we can’t scale that to thousands.

Parallelism: If we had more physical resources, two or more tasks could really be done at the same time. If I had 4 arms, this article could be finished in half the time.

Let’s have a look at both terms in the computer world:

Concurrency:

Concurrency means that an application is making progress on more than one task at the same time (concurrently). If the computer only has one CPU, then the application may not make progress on more than one task at exactly the same time, but more than one task is being processed at a time inside the application. It doesn’t completely finish one task before it begins the next.

Concurrency applies whenever there are at least two tasks. When an application is capable of executing two tasks virtually at the same time, we call it a concurrent application. Although the tasks appear to run simultaneously, they may not. They take advantage of the operating system’s CPU time-slicing, where each task runs part of its work and then goes into a waiting state.

When the first task is in the waiting state, the CPU is assigned to the second task so it can complete part of its work. The operating system schedules tasks based on their priority, assigning the CPU and other computing resources (e.g. memory) turn by turn to all tasks and giving each a chance to complete. To the end user, it seems that all tasks are running in parallel.

Complexity in Concurrency

Let’s assume 5 friends have moved into a house and each has a bed to make. Which is the more complex way to structure this?

- 5 people assembling one bed at the same time Or

- each person assembling their own bed

Think about how to write instructions for several of your friends on how to assemble a bed together, without holding each other up or having to wait for tools. They would need to coordinate their actions at the right time to assemble the parts of a bed into a finished bed. Those instructions would be really complicated, hard to write and probably hard to read, too.

With each person building their own bed, the instructions are very simple and no one has to wait for other people to finish or for tools to be available.

There is a talk by Rob Pike on Concurrency is not Parallelism.

Parallelism

Parallelism doesn’t require two tasks to exist. It physically runs parts of a task, or multiple tasks, at the same time using the multiple cores of the CPU, assigning one core to each task or sub-task. Parallelism essentially requires hardware with multiple processing units. On a single-core CPU, you may get concurrency but not parallelism.

Concurrency is the composition of independently executing processes, while parallelism is the simultaneous execution of computations. Parallelism means that an application splits its tasks up into smaller subtasks which can be processed in parallel, for instance on multiple CPUs at the exact same time.

Concurrency is about dealing with a lot of things at once; its focus is on structure. Parallelism is about doing a lot of things at once; its focus is on execution.

- An application can be concurrent but not parallel: it makes progress on more than one task over the same period, but no two tasks execute at the same instant.

- An application can be parallel but not concurrent: it works on one task at a time, but runs multiple sub-tasks of that task on a multi-core CPU at the same time.

- An application can be neither parallel nor concurrent: it processes all tasks one at a time, sequentially.

- An application can be both parallel and concurrent: it processes multiple tasks concurrently on a multi-core CPU at the same time.
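To make the "split one task into sub-tasks" idea concrete, here is a minimal sketch using DispatchQueue.concurrentPerform. The function name parallelSum and the chunking scheme are my own assumptions for illustration; whether the chunks really run in parallel depends on how many cores the system gives us.

```swift
import Foundation

/// Hypothetical helper: sums `numbers` by splitting the work into `chunks` sub-tasks.
func parallelSum(of numbers: [Int], chunks: Int) -> Int {
    precondition(chunks > 0, "need at least one chunk")
    let chunkSize = (numbers.count + chunks - 1) / chunks
    var partialSums = [Int](repeating: 0, count: chunks)

    partialSums.withUnsafeMutableBufferPointer { sums in
        // concurrentPerform returns only after every iteration has finished.
        DispatchQueue.concurrentPerform(iterations: chunks) { index in
            let start = min(index * chunkSize, numbers.count)
            let end = min(start + chunkSize, numbers.count)
            // Each iteration writes only to its own slot, so no lock is needed.
            sums[index] = numbers[start..<end].reduce(0, +)
        }
    }
    return partialSums.reduce(0, +)
}

let total = parallelSum(of: Array(1...100), chunks: 4)
print(total) // 5050
```

On a single-core machine the same code still works; the sub-tasks are then simply executed one after another.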

One of the greatest improvements in the technology of CPUs since their existence is the capability to contain multiple cores and therefore to run multiple threads, which means to serve more than one task at any given moment. In iOS, there are 2 ways to achieve concurrency: Grand Central Dispatch and OperationQueue.

Grand Central Dispatch

- Synchronous and Asynchronous Execution

- Serial and Concurrent Queues

- System-Provided Queues

- Custom Queues

Grand Central Dispatch:

Grand Central Dispatch (GCD) is a queue-based API that lets you execute closures on pools of worker threads. Work items are dequeued in first-in, first-out order; the order in which they complete depends on the duration of each job.

A dispatch queue executes tasks either serially or concurrently, but always starts them in FIFO order. An application submits tasks to a queue in the form of blocks, either synchronously or asynchronously. The dispatch queue executes each block on a thread pool provided by the system, and there is no guarantee about which thread a submitted task will execute on.

The GCD API changed in Swift 3: SE-0088 modernized its design and made it more object oriented.

The framework makes it easier to execute code concurrently on multi-core systems by submitting tasks to dispatch queues managed by the system.

Synchronous and Asynchronous Execution

Each task or work item can be submitted either synchronously or asynchronously. With a synchronous call, the caller waits for the task to finish before moving on to the next line. With an asynchronous call, the method returns immediately and the caller keeps going while the task makes progress on the queue.
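A minimal sketch of the difference, assuming a custom serial queue with a made-up label. The relative order of the last two prints is not fixed, which is exactly the point of async.

```swift
import Foundation

let queue = DispatchQueue(label: "com.example.demo") // hypothetical label, serial by default

// sync: the caller blocks until the closure has run, so it can hand back a value.
let answer = queue.sync { 6 * 7 }
print("sync returned \(answer)")

// async: the call returns immediately; the closure runs later on the queue.
queue.async {
    print("async work item running")
}
print("async call has already returned")

// An empty sync on the same serial queue returns only after earlier items finish.
// It is here only so a command-line run does not exit before the async item prints.
queue.sync { }
```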

Serial and Concurrent Queues

A dispatch queue can be either serial, so that work items are executed one at a time, or concurrent, so that work items are dequeued in order but may run at the same time and finish in any order. Both serial and concurrent queues dequeue work items in first-in, first-out (FIFO) order.

Serial Queue

Let’s have a look at an example of dispatching a task to the main queue asynchronously.

import Foundation

var value: Int = 2

DispatchQueue.main.async {

for i in 0...3 {

value = i

print("\(value) ✴️")

}

}

for i in 4...6 {

value = i

print("\(value) ✡️")

}

DispatchQueue.main.async {

value = 9

print(value)

}

Because these tasks are dispatched asynchronously, the for loop in the current context runs first, then the first task submitted to the main queue, and then the second.

While doing this experiment, I came to know that we can’t call DispatchQueue.main.sync from the main thread.

Attempting to synchronously execute a work item on the main queue results in dead-lock.

Do not call the dispatch_sync function from a task that is executing on the same queue that you pass to your function call. Doing so will deadlock the queue. If you need to dispatch to the current queue, do so asynchronously using the dispatch_async function.

— Apple Documentation

Given that the main queue is a serial queue (it runs one work item at a time, on the main thread), calling the following statement from the main thread:

DispatchQueue.main.sync {}

will cause the following events:

- sync queues the block in the main queue.

- sync blocks the thread of the main queue until the block finishes executing.

- sync waits forever because the thread where the block is supposed to run is blocked.

The key to understanding this is that, when called from the main thread, DispatchQueue.main.sync only queues the block. The block could only run on a later pass through the main queue, and that pass never comes because the main thread is stuck waiting.

For other queues, sync has an optimization worth knowing about: it invokes the block on the current thread when possible.
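One common way to stay out of this trap is to check the current thread before calling sync on the main queue. The helper name syncOnMain below is hypothetical, and the sketch assumes that "on the main thread" is a good enough stand-in for "on the main queue", which holds in typical app code.

```swift
import Foundation

/// Hypothetical helper: runs `work` on the main queue and waits for it,
/// without deadlocking when the caller is already on the main thread.
func syncOnMain<T>(_ work: () -> T) -> T {
    if Thread.isMainThread {
        return work()                          // already on main: just call it
    }
    return DispatchQueue.main.sync(execute: work)
}

let title = syncOnMain { "Updated on main" }
print(title)
```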

Concurrent Queue:

A global concurrent queue can be obtained by passing a QoS class to this method:

DispatchQueue.global(qos: .default)

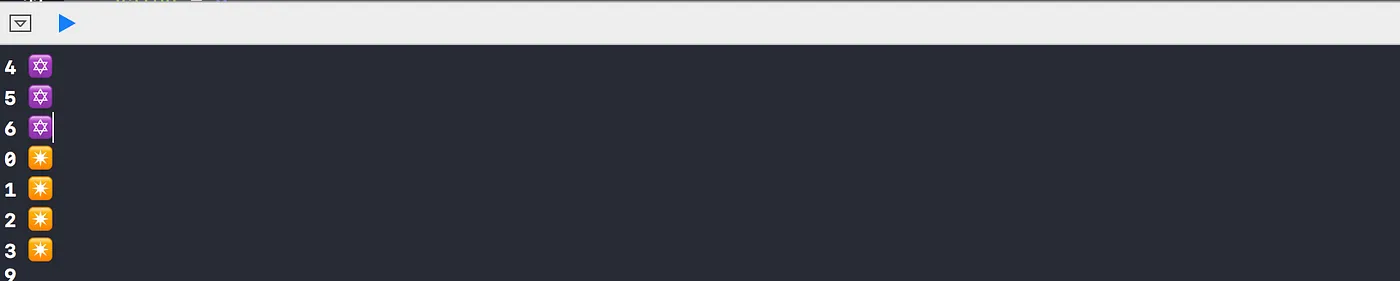

System-Provided Queues

When an app launches, the system automatically creates a special queue called the main queue. Work items enqueued to the main queue execute serially on your app’s main thread. The main queue can be accessed using DispatchQueue.main.

Apart from the main queue, the system provides several global concurrent queues. When sending tasks to the global concurrent queues, you specify a Quality of Service (QoS) class.

Primary QoS:

- User-interactive: This represents tasks that must complete immediately in order to provide a nice user experience. Use it for UI updates, event handling and small workloads. The total amount of work done in this class during the execution of your app should be small. This should run on the main thread.

- User-initiated: The user initiates these asynchronous tasks from the UI. Use them when the user is waiting for immediate results and for tasks required to continue user interaction. They execute in the high priority global queue.

- Utility: This represents long-running tasks. Use it for computations, I/O, networking, continuous data feeds and similar tasks. This class is designed to be energy efficient. Utility tasks typically have a progress bar that is visible to the user. This will get mapped into the low priority global queue. Work to be performed takes a few seconds to a few minutes.

- Background: This represents tasks that the user is not directly aware of such as prefetching, backup. This will get mapped into the background priority global queue. It’s useful for work that takes significant time, such as minutes or hours.

Special QoS:

- Default: The priority level of this QoS falls between user-initiated and utility. Work that has no QoS information assigned is treated as default, and the GCD global queue runs at this level.

- Unspecified: This represents the absence of QoS information and cues the system that an environmental QoS should be inferred. Threads can have an unspecified QoS if they use legacy APIs that may opt the thread out of QoS.

Global Concurrent Queues:

In the past, GCD provided high, default, low, and background global concurrent queues for prioritizing work. The corresponding QoS classes should now be used in place of these queues.
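As a rough sketch, this is how work can be sent to the global queues with a QoS class instead of the old priority constants. The workload names are invented for illustration, and the group is only there so a command-line run waits for the output.

```swift
import Foundation

let group = DispatchGroup()

// Hypothetical workloads, one per QoS class described above.
let workloads: [(name: String, qos: DispatchQoS.QoSClass)] = [
    ("refresh visible list", .userInitiated),
    ("import photo library", .utility),
    ("prefetch next page", .background)
]

for workload in workloads {
    // Each global queue is concurrent; the QoS class tells the system how urgent the work is.
    DispatchQueue.global(qos: workload.qos).async(group: group) {
        print("\(workload.name) running at \(workload.qos)")
    }
}

// Block until all three items are done, or give up after five seconds.
let outcome = group.wait(timeout: .now() + .seconds(5))
print(outcome == .success ? "all workloads finished" : "still running")
```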

Custom Queues

GCD provides 3 types of queues i.e. main queue, global queue and custom queues.

There are three init methods available to us when creating our own queues:

- init(label: "queueName")

- init(label: "queueName", attributes: {attributes})

- init(label: "queueName", qos: {QoS class}, attributes: {attributes}, autoreleaseFrequency: {autoreleaseFrequency}, target: {queue})

The first initializer will implicitly create a serial queue.

The attributes parameter in the 2nd and 3rd initializers refers to DispatchQueue.Attributes, an option set with two options: .concurrent, which creates a concurrent queue, and .initiallyInactive, which creates an inactive queue. An inactive queue can still be modified, and it will not begin executing its work items until it is activated with a call to activate(). The autoreleaseFrequency parameter refers to DispatchQueue.AutoreleaseFrequency, which has three cases:

- .inherit: Dispatch queues with this autorelease frequency inherit the behavior from their target queue. This is the default behavior for manually created queues.

- .workItem: Dispatch queues with this autorelease frequency push and pop an autorelease pool around the execution of every block that was submitted to them asynchronously. When a queue uses the per-work-item autorelease frequency (either directly or inherited from its target queue), any block submitted asynchronously to this queue (via async(), .barrier, .notify(), etc.) is executed as if surrounded by an individual autoreleasepool. Autorelease frequency has no effect on blocks that are submitted synchronously to a queue (via sync(), .barrier).

- .never: Dispatch queues with this autorelease frequency never set up an individual autorelease pool around the execution of a block that is submitted to them asynchronously. This is the behavior of the global concurrent queues.
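Putting the initializers together, a minimal sketch could look like this. All labels are made up, and the barrier sync at the end is only there to wait for the queued item before printing.

```swift
import Foundation

// All labels below are hypothetical.
let serial = DispatchQueue(label: "com.example.serial")
let concurrent = DispatchQueue(label: "com.example.concurrent", attributes: .concurrent)

let configured = DispatchQueue(
    label: "com.example.configured",
    qos: .utility,
    attributes: [.concurrent, .initiallyInactive],
    autoreleaseFrequency: .workItem,
    target: nil                        // nil lets the system pick a global queue
)

var ran = false
configured.async { ran = true }        // queued, but the queue is still inactive
configured.activate()                  // work items may start from here on
configured.sync(flags: .barrier) { }   // returns after everything queued earlier has finished

print(serial.label, concurrent.label, configured.label, configured.qos.qosClass, ran)
```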

Let’s see how these queues work synchronously or asynchronously:

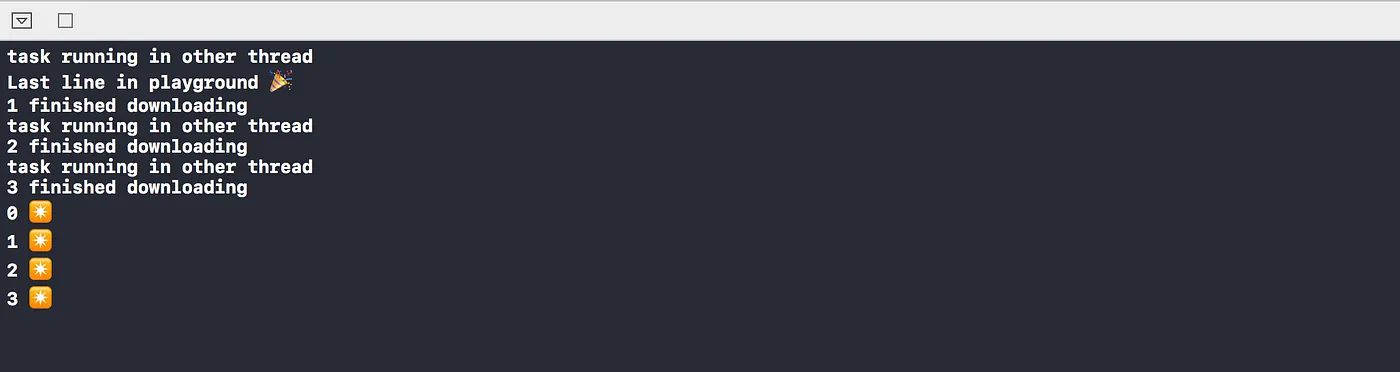

- Serial Queue executing task asynchronously

import Foundation

import PlaygroundSupport

PlaygroundPage.current.needsIndefiniteExecution = true

var value: Int = 20

let serialQueue = DispatchQueue(label: "com.queue.Serial")

func doAsyncTaskInSerialQueue() {

for i in 1...3 {

serialQueue.async {

if Thread.isMainThread{

print("task running in main thread")

}else{

print("task running in other thread")

}

let imageURL = URL(string: "https://upload.wikimedia.org/wikipedia/commons/0/07/Huge_ball_at_Vilnius_center.jpg")!

let _ = try! Data(contentsOf: imageURL)

print("\(i) finished downloading")

}

}

}

doAsyncTaskInSerialQueue()

serialQueue.async {

for i in 0...3 {

value = i

print("\(value) ✴️")

}

}

print("Last line in playground 🎉")

With async, the task runs on a thread other than the main thread. Async means: execute the next line without waiting for the block to finish, so the main thread and main queue are not blocked. Since it is a serial queue, all tasks are executed in the order they were added. Tasks on a serial queue always run one at a time, although GCD does not promise that they all use the same thread.

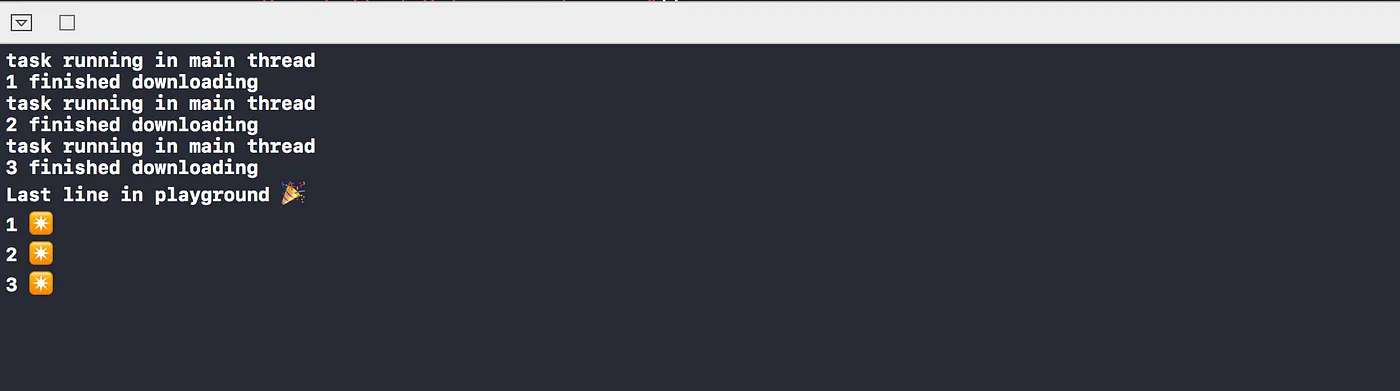

- Serial Queue executing task synchronously

import Foundation

import PlaygroundSupport

PlaygroundPage.current.needsIndefiniteExecution = true

var value: Int = 20

let serialQueue = DispatchQueue(label: "com.queue.Serial")

func doSyncTaskInSerialQueue() {

for i in 1...3 {

serialQueue.sync {

if Thread.isMainThread{

print("task running in main thread")

}else{

print("task running in other thread")

}

let imageURL = URL(string: "https://upload.wikimedia.org/wikipedia/commons/0/07/Huge_ball_at_Vilnius_center.jpg")!

let _ = try! Data(contentsOf: imageURL)

print("\(i) finished downloading")

}

}

}

doSyncTaskInSerialQueue()

serialQueue.async {

for i in 0...3 {

value = i

print("\(value) ✴️")

}

}

print("Last line in playground 🎉")

With sync, the task may run on the main thread. Sync submits a block to the given queue and waits for it to complete, which blocks the calling thread (here, the main thread). Because the caller is blocked anyway, GCD can, as an optimization, run the block directly on the calling thread. So code dispatched to a background queue may actually execute on the main thread. Since it is a serial queue, all tasks are executed in the order they were added (FIFO).

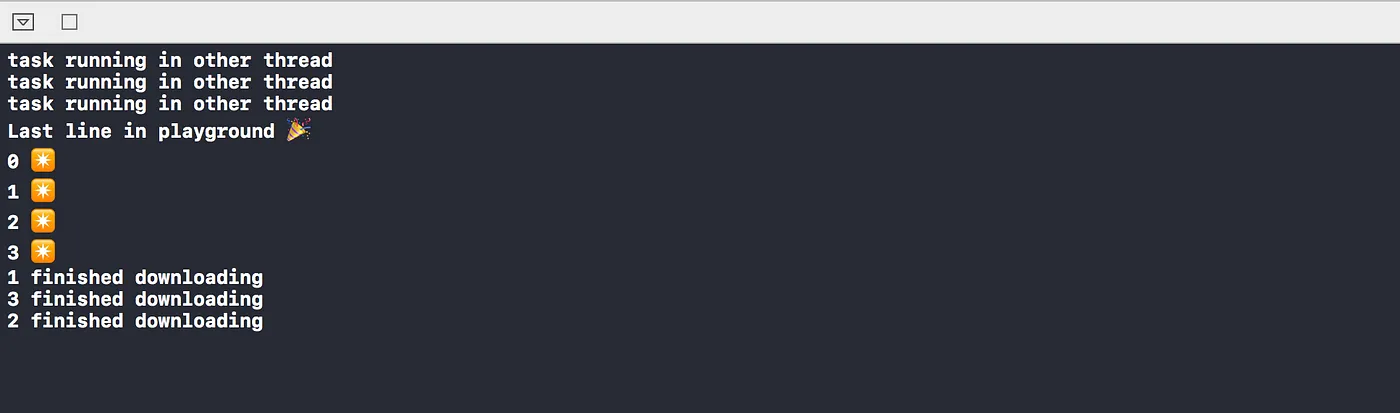

- Concurrent Queue executing task asynchronously

import Foundation

import PlaygroundSupport

PlaygroundPage.current.needsIndefiniteExecution = true

var value: Int = 20

let concurrentQueue = DispatchQueue(label: "com.queue.Concurrent", attributes: .concurrent)

func doAsyncTaskInConcurrentQueue() {

for i in 1...3 {

concurrentQueue.async {

if Thread.isMainThread{

print("task running in main thread")

}else{

print("task running in other thread")

}

let imageURL = URL(string: "https://upload.wikimedia.org/wikipedia/commons/0/07/Huge_ball_at_Vilnius_center.jpg")!

let _ = try! Data(contentsOf: imageURL)

print("\(i) finished downloading")

}

}

}

doAsyncTaskInConcurrentQueue()

concurrentQueue.async {

for i in 0...3 {

value = i

print("\(value) ✴️")

}

}

print("Last line in playground 🎉")

With async, the task runs on another thread. Async means: execute the next line without waiting for the block to finish, so the main thread is not blocked. In a concurrent queue, tasks are started in the order they were added, but on different threads, so they are not guaranteed to finish in that order. The completion order can differ on each run because threads are managed and assigned by the system. The tasks may execute in parallel.

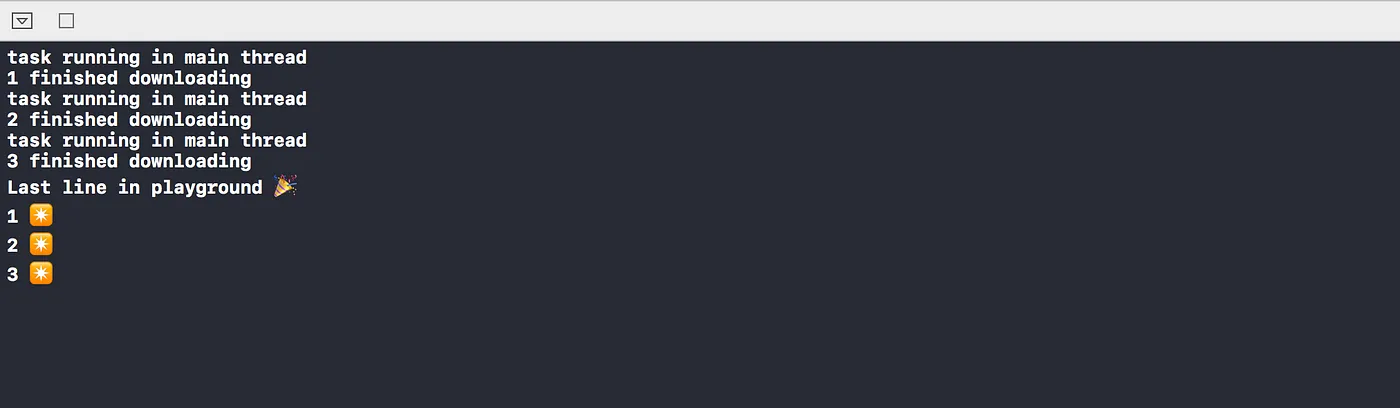

- Concurrent Queue executing task synchronously

import Foundation

import PlaygroundSupport

PlaygroundPage.current.needsIndefiniteExecution = true

var value: Int = 20

let concurrentQueue = DispatchQueue(label: "com.queue.Concurrent", attributes: .concurrent)

func doSyncTaskInConcurrentQueueQueue() {

for i in 1...3 {

concurrentQueue.sync {

if Thread.isMainThread{

print("task running in main thread")

}else{

print("task running in other thread")

}

let imageURL = URL(string: "https://upload.wikimedia.org/wikipedia/commons/0/07/Huge_ball_at_Vilnius_center.jpg")!

let _ = try! Data(contentsOf: imageURL)

print("\(i) finished downloading")

}

}

}

doSyncTaskInConcurrentQueueQueue()

concurrentQueue.async {

for i in 1...3 {

value = i

print("\(value) ✴️")

}

}

print("Last line in playground 🎉")

With sync, the task may run on the main thread. Sync submits a block to the given queue and waits for it to complete, which blocks the calling thread (here, the main thread). Because the caller is blocked anyway, GCD can, as an optimization, run the block directly on the calling thread. So code dispatched to a background queue may actually execute on the main thread. Since it is a concurrent queue, tasks are not required to finish in the order they were added. With synchronous calls they do, because each call must return before the next one is submitted, although they may be processed by different threads. So the concurrent queue behaves like a serial queue here.

asyncAfter:

If you want to execute a task on a queue after some delay, you can pass the delay to asyncAfter() instead of using sleep().
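A minimal sketch of asyncAfter, assuming a made-up queue label and a half-second delay. The semaphore is only there so a command-line run does not exit before the delayed block fires; in an app you would not need it.

```swift
import Foundation

let queue = DispatchQueue(label: "com.example.delayed") // hypothetical label
let done = DispatchSemaphore(value: 0)
let start = Date()
var elapsed: TimeInterval = 0

// Schedule the closure roughly half a second from now; the calling thread is not blocked.
queue.asyncAfter(deadline: .now() + .milliseconds(500)) {
    elapsed = Date().timeIntervalSince(start)
    done.signal()
}

print("scheduled, caller keeps going")
done.wait() // demo-only: keep the process alive until the block has run
print("ran after about \(elapsed) seconds")
```

Unlike sleep(), no thread sits idle during the delay; the system simply enqueues the block once the deadline passes.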


1. Target Queue

A custom dispatch queue doesn’t execute any work itself; it passes work to its target queue. By default, the target queue of a custom dispatch queue is a default-priority global queue. Since Swift 3, once a dispatch queue is activated, it cannot be mutated anymore. The target queue of a custom queue can be set with the setTarget(queue:) function. Setting a target on an already activated queue will compile but then fail at runtime. Fortunately, the DispatchQueue initializer accepts a target argument. If, for whatever reason, you still need to set the target on an already created queue, you can do that by using the initiallyInactive attribute, available since iOS 10.

DispatchQueue(label: "queue", attributes: .initiallyInactive)

That will allow you to modify it until you activate it.

Calling the activate() method of the DispatchQueue lets its queued tasks start executing. As the queue hasn’t been marked as concurrent, they’ll run in serial order. To make the queue concurrent we need to specify:

DispatchQueue(label: "queue", attributes: [.initiallyInactive, .concurrent])

You can use any other dispatch queue as the target queue, even another custom queue, so long as you never create a cycle. This function can be used to set the priority of a custom queue by simply setting its target queue to a different global queue. Only the global concurrent queues and the main queue get to execute blocks. All other queues must (eventually) target one of these special queues.

You might have noticed target queue in Dispatch API or frameworks you use. I found the use of target queue in RxSwift library.

import Foundation

import PlaygroundSupport

PlaygroundPage.current.needsIndefiniteExecution = true

var value: Int = 2

let serialQueue = DispatchQueue(label: "serialQueue")

let concurrentQueue = DispatchQueue(label: "concurrentQueue", attributes: [.initiallyInactive, .concurrent])

concurrentQueue.setTarget(queue: serialQueue)

concurrentQueue.activate()

concurrentQueue.async {

for j in 0...4 {

value = j

print("\(value) ✡️")

}

}

concurrentQueue.async {

for j in 5...7 {

value = j

print("\(value) ✴️")

}

}

Target queue uses:

- If you set your custom queue's target to be the low priority global queue, all work on your custom queue will execute with low priority and the same with the high priority global queue.

- To target a custom queue to the main queue. This will cause all blocks submitted to that custom queue to run on the main thread. The advantage of doing this instead of simply using the main queue directly is that your custom queue can be independently suspended and resumed, and could potentially be retargeted onto a global queue afterward.

- To target custom queues for other custom queues. This will force multiple queues to be serialized with respect to each other, and essentially creates a group of queues which can all be suspended and resumed together by suspending/resuming the queue that they target.
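The last use case can be sketched as follows. The queue labels are hypothetical; the point is that two queues sharing one serial target are serialized with respect to each other and can be paused together.

```swift
import Foundation

// Hypothetical labels; both queues funnel their work into one serial target.
let target = DispatchQueue(label: "com.example.target")
let network = DispatchQueue(label: "com.example.network", target: target)
let database = DispatchQueue(label: "com.example.database", target: target)

var log: [String] = []   // only mutated from blocks that end up on `target`

target.suspend()                           // pauses both child queues at once
network.async { log.append("network") }
database.async { log.append("database") }
print("queued while suspended")
target.resume()                            // both queues start draining again

network.sync { }                           // wait for each child queue to drain
database.sync { }
print(log.sorted())
```

Because everything ends up on the same serial target, the two appends never overlap, so the array needs no extra lock in this sketch.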

Get Current Queue Name

The API provides a way to check whether a call is on the main thread, but no public way to get the current queue’s name. With the snippet below you can print the current queue’s label anywhere.

extension DispatchQueue {

static var currentLabel: String? {

let name = __dispatch_queue_get_label(nil)

return String(cString: name, encoding: .utf8)

}

}

2. DispatchGroup

With dispatch groups we can group together multiple tasks and either wait for them to be completed or be notified once they are complete. Tasks can be asynchronous or synchronous and can even run on different queues. Dispatch groups are managed by DispatchGroup object.

Let’s consider a scenario: I have to download a couple of images and I want to notify the user when all images are downloaded.

import Foundation

import PlaygroundSupport

PlaygroundPage.current.needsIndefiniteExecution = true

let concurrentQueue = DispatchQueue(label: "com.queue.Concurrent", attributes: .concurrent)

func performAsyncTaskIntoConcurrentQueue(with completion: @escaping () -> ()) {

concurrentQueue.async {

for i in 1...5 {

if Thread.isMainThread {

print("\(i) task running in main thread")

} else{

print("\(i) task running in other thread")

}

concurrentQueue.async {

let imageURL = URL(string: "https://upload.wikimedia.org/wikipedia/commons/0/07/Huge_ball_at_Vilnius_center.jpg")!

let _ = try! Data(contentsOf: imageURL)

print("\(i) finished downloading")

}

}

DispatchQueue.main.async {

completion()

}

}

}

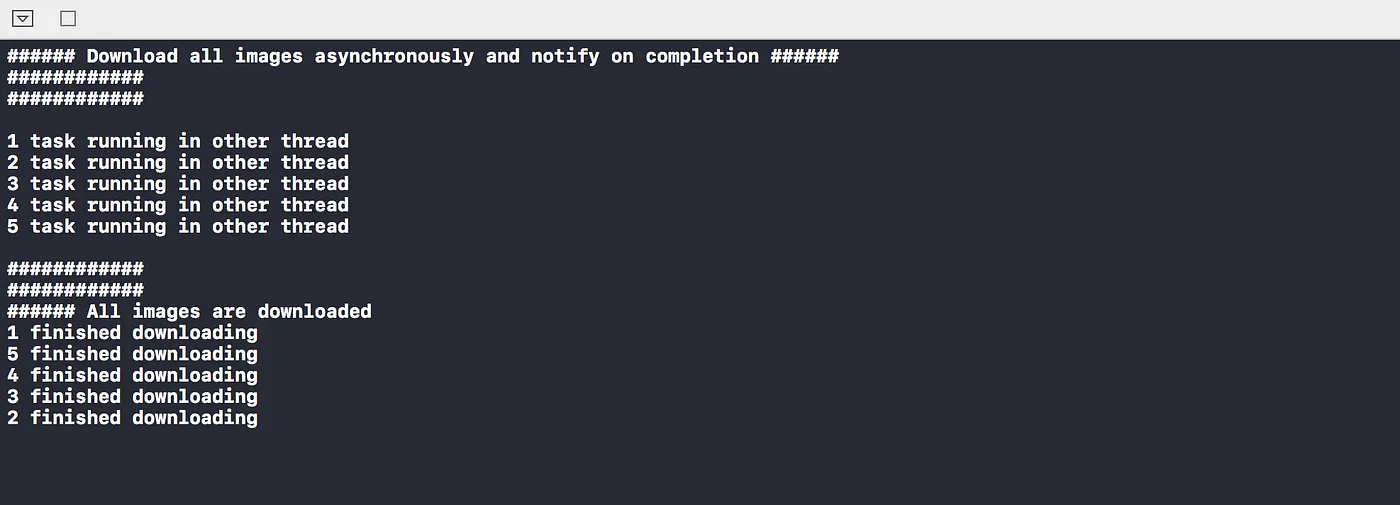

print("###### Download all images asynchronously and notify on completion ######")

print("############")

print("############\n")

performAsyncTaskIntoConcurrentQueue(with: {

print("\n############")

print("############")

print("###### All images are downloaded")

})

With this implementation it’s difficult to get notified on completion, because the downloads are dispatched asynchronously and completion() is called before they finish. This is where DispatchGroup comes into the picture:

import Foundation

import PlaygroundSupport

PlaygroundPage.current.needsIndefiniteExecution = true

let concurrentQueue = DispatchQueue(label: "com.queue.Concurrent", attributes: .concurrent)

func performAsyncTaskIntoConcurrentQueue(with completion: @escaping () -> ()) {

let group = DispatchGroup()

for i in 1...5 {

group.enter()

concurrentQueue.async {

let imageURL = URL(string: "https://upload.wikimedia.org/wikipedia/commons/0/07/Huge_ball_at_Vilnius_center.jpg")!

let _ = try! Data(contentsOf: imageURL)

print("###### Image \(i) Downloaded ######")

group.leave()

}

}

/* Either write below code or group.notify() to execute completion block

group.wait()

DispatchQueue.main.async {

completion()

}

*/

group.notify(queue: DispatchQueue.main) {

completion()

}

}

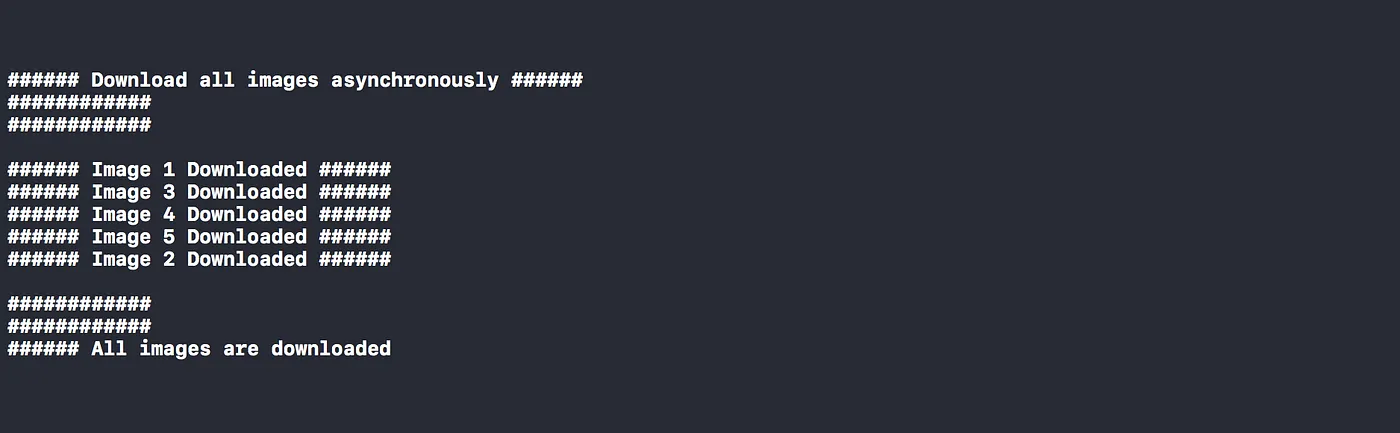

print("###### Download all images asynchronously and notify on completion ######")

print("############")

print("############\n")

performAsyncTaskIntoConcurrentQueue(with: {

print("\n############")

print("############")

print("###### All images are downloaded")

})

DispatchGroup allows for aggregate synchronization of work. It can be used to submit multiple different work items or blocks and track when they all complete, even though they might run on different queues. The only requirement is to make balanced calls to enter() and leave() on the dispatch group so that it can synchronize our tasks. Call enter() to manually tell the group that a task has started and leave() to tell it that the work is done. You can also call group.wait(), which blocks the current thread until the group’s tasks have completed. There are two ways to call the completion block:

- Using wait() and then executing the completion block on the main queue, or

- Calling notify() on the group

There is also wait(timeout:). It blocks the current thread, but continues anyway once the specified timeout has passed. The timeout is of type DispatchTime; the syntax .now() + 1 creates a timeout one second from now. wait(timeout:) returns an enum that can be used to determine whether the group completed or timed out.
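Here is a small sketch of checking that return value. The delays are arbitrary numbers chosen for the demo, and asyncAfter stands in for slow work.

```swift
import Foundation

let group = DispatchGroup()

group.enter()
DispatchQueue.global().asyncAfter(deadline: .now() + .milliseconds(300)) {
    group.leave() // stands in for slow work finishing
}

// Give up after 50 ms: the work is still running, so this reports a timeout.
let first = group.wait(timeout: .now() + .milliseconds(50))
// Wait again with a generous limit: the group empties well before it expires.
let second = group.wait(timeout: .now() + .seconds(5))

switch first {
case .success: print("first wait: group completed")
case .timedOut: print("first wait: timed out")
}
print(second == .success ? "second wait: group completed" : "second wait: timed out")
```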

Some other use cases:

- You need to run two distinct network calls. Only after they both have returned you have the necessary data to parse the responses.

- An animation is running, parallel to a long database call. Once both of those have finished, you’d like to hide a loading spinner.

- The network API you’re using is too quick. Now your pull-to-refresh gesture doesn’t seem to be working, even though it is. The API call returns so quickly that the refresh control dismisses itself as soon as it has finished the appearance animation, which makes it look like it’s not refreshing. To solve this, we can add a faux delay, i.e. we can wait for both some minimum time threshold and the network call before hiding the refresh control.
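The faux delay can be sketched with a dispatch group that tracks two things: the request and a timer. The function name refresh, its parameters and the fake request are all assumptions made for this example; in an app you would leave notifyOn at its default so the completion runs on the main queue.

```swift
import Foundation

/// Hypothetical helper: calls `completion` only after BOTH the request and the minimum delay are done.
/// `request` stands in for a network call that reports when it finishes.
func refresh(minimumDuration: DispatchTimeInterval,
             request: (@escaping () -> Void) -> Void,
             notifyOn queue: DispatchQueue = .main,
             completion: @escaping () -> Void) {
    let group = DispatchGroup()

    group.enter()                       // 1: the network call
    request { group.leave() }

    group.enter()                       // 2: the minimum time threshold
    DispatchQueue.global().asyncAfter(deadline: .now() + minimumDuration) {
        group.leave()
    }

    group.notify(queue: queue) { completion() }
}

// Usage: a fake request that returns instantly still takes about 400 ms overall.
let finished = DispatchSemaphore(value: 0)
let started = Date()
refresh(minimumDuration: .milliseconds(400),
        request: { done in done() },
        notifyOn: .global()) {
    finished.signal()
}
finished.wait() // demo-only: keep the process alive until the completion has run
let elapsed = Date().timeIntervalSince(started)
print("spinner hidden after about \(elapsed) seconds")
```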

2. DispatchWorkItem:

One common misconception about GCD is that “once you schedule a task it can’t be cancelled, you need to use the Operation API for that”. With iOS 8 & macOS 10.10 DispatchWorkItem was introduced, which provides this exact functionality in an easy to use API.

DispatchWorkItem encapsulates work that can be performed. A work item can be dispatched onto a DispatchQueue and within a DispatchGroup. A DispatchWorkItem can also be set as a DispatchSource event, registration, or cancel handler.

In other words, a DispatchWorkItem encapsulates a block of code that can be dispatched to any queue.

A dispatch work item has a cancel flag. If it is cancelled before running, the dispatch queue won’t execute it and will skip it. If it is cancelled during its execution, the isCancelled property returns true. In that case, we can abort the execution ourselves. Work items can also notify a queue when their task is completed.

Note: GCD doesn’t perform preemptive cancelations. To stop a work item that has already started, you have to test for cancelations yourself.
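The "cancelled before running" case can be checked with a tiny sketch like the one below (the queue label is made up). The body of the cancelled item should never run, so didRun is expected to stay false.

```swift
import Foundation

let queue = DispatchQueue(label: "com.example.workitem") // hypothetical label
var didRun = false

let item = DispatchWorkItem {
    didRun = true
}

item.cancel()              // cancelled before it was ever submitted
queue.sync(execute: item)  // the body of a cancelled item is skipped

print("isCancelled: \(item.isCancelled), didRun: \(didRun)")
```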

If you need to wait for a work item, the wait function is there to help you out. It blocks the calling thread until the work item has finished executing, or until the timeout we pass in has elapsed.

OOPS, there are two types of wait function in DispatchWorkItem. Which one to use? Confused?

func wait(timeout: DispatchTime) -> DispatchTimeoutResult

func wait(wallTimeout: DispatchWallTime) -> DispatchTimeoutResult

DispatchTime is basically the time according to device clock and if the device goes to sleep, the clock sleeps too. A perfect couple.

But DispatchWallTime is the time according to wall clock, who doesn’t sleep at all, A Night Watch.

After the execution, we can notify the same queue or other queue by calling func notify(qos: DispatchQoS = default, flags: DispatchWorkItemFlags = default, queue: DispatchQueue, execute: @escaping () -> Void)

DispatchQoS works with DispatchQueue and helps categorize tasks according to their importance. Tasks with a higher QoS are scheduled ahead of others, and the system gives them more resources for faster execution. Tasks with a lower QoS run later, with fewer resources and less energy. Choosing the right class helps the application stay responsive and energy efficient.

DispatchWorkItemFlags is a set of options that customize the behaviour of a DispatchWorkItem. Depending on the flags you provide, the work item can act as a barrier (.barrier), run detached from the current execution context (.detached), or inherit or enforce a particular QoS (.inheritQoS, .enforceQoS).

import Foundation

import PlaygroundSupport

PlaygroundPage.current.needsIndefiniteExecution = true

let concurrentQueue = DispatchQueue(label: "com.queue.Concurrent", attributes: .concurrent)

func performAsyncTaskInConcurrentQueue() {

var task:DispatchWorkItem?

task = DispatchWorkItem {

for i in 1...5 {

if Thread.isMainThread {

print("task running in main thread")

} else{

print("task running in other thread")

}

if task?.isCancelled ?? true {

break

}

let imageURL = URL(string: "https://upload.wikimedia.org/wikipedia/commons/0/07/Huge_ball_at_Vilnius_center.jpg")!

let _ = try! Data(contentsOf: imageURL)

print("\(i) finished downloading")

}

task = nil

}

/*

There are two ways to execute task on queue. Either by providing task to execute parameter or

within async block call perform() on task. perform() executes task on current queue.

*/

// concurrentQueue.async(execute: task!)

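concurrentQueue.async {

// wait blocks this thread until the work item finishes or the timeout passes.

// The item has not started yet, so this call times out and acts as a 2-second delay.

_ = task?.wait(wallTimeout: .now() + .seconds(2))

// _ = task?.wait(timeout: .now() + .seconds(2))

task?.perform()

}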

concurrentQueue.asyncAfter(deadline: .now() + .seconds(2), execute: {

task?.cancel()

})

task?.notify(queue: concurrentQueue) {

print("\n############")

print("############")

print("###### Work Item Completed")

}

}

performAsyncTaskInConcurrentQueue()

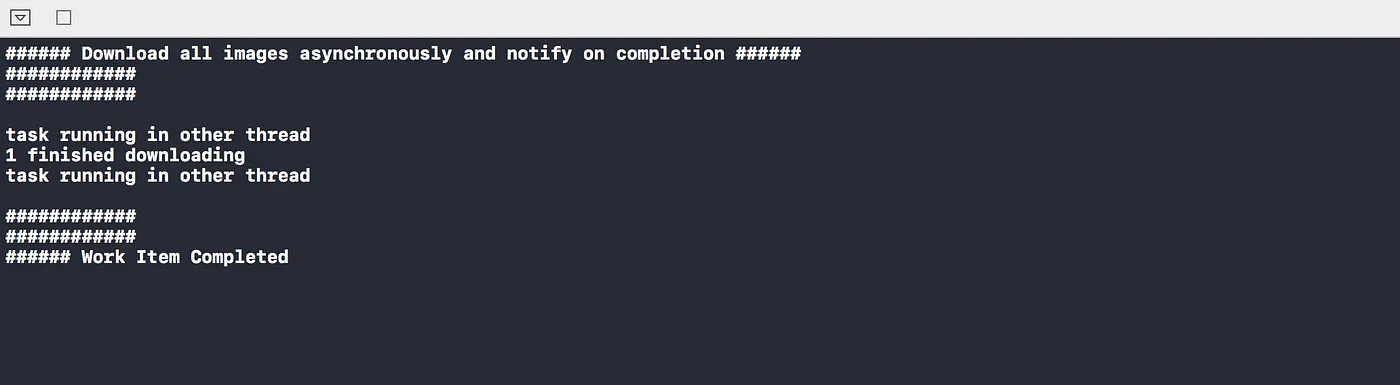

print("###### Download all images asynchronously and notify on completion ######")

print("############")

print("############\n")

The asyncAfter block cancels the task after 2 seconds, which interrupts the image downloads because the for loop checks isCancelled on each iteration.

3. DispatchBarrier

One frequent concern with singletons is that often they’re not thread safe as singletons are often used from multiple controllers accessing the singleton instance at the same time. Thread safe code can be safely called from concurrent tasks without causing any problems such as data corruption. Code that is not thread safe can only run in one context at a time.

There are two thread safety cases to consider: during initialization of the singleton instance and during reads and writes to the instance.

Initialization turns out to be the easy case because of how Swift initializes static variables. It initializes static variables when they are first accessed, and it guarantees initialization is atomic.
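A quick way to convince yourself of this is to count how often the initializer runs when many threads touch the singleton at once. The Settings class and its initCount counter are hypothetical and exist only for this demo.

```swift
import Foundation

final class Settings {                       // hypothetical singleton
    static let shared = Settings()           // initialized lazily, exactly once
    static var initCount = 0                 // demo-only counter
    private init() { Settings.initCount += 1 }
}

// Touch the singleton from many threads at once.
DispatchQueue.concurrentPerform(iterations: 100) { _ in
    _ = Settings.shared
}

print(Settings.initCount) // 1
```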

A critical section is a piece of code that must not execute concurrently, that is, from two threads at once. This is usually because the code manipulates a shared resource such as a variable that can become corrupt if it’s accessed by concurrent processes.

When submitted to a global queue or to a queue not created with the .concurrent attribute, barrier blocks behave identically to blocks submitted with the async()/sync() API.

Let’s take a problem:

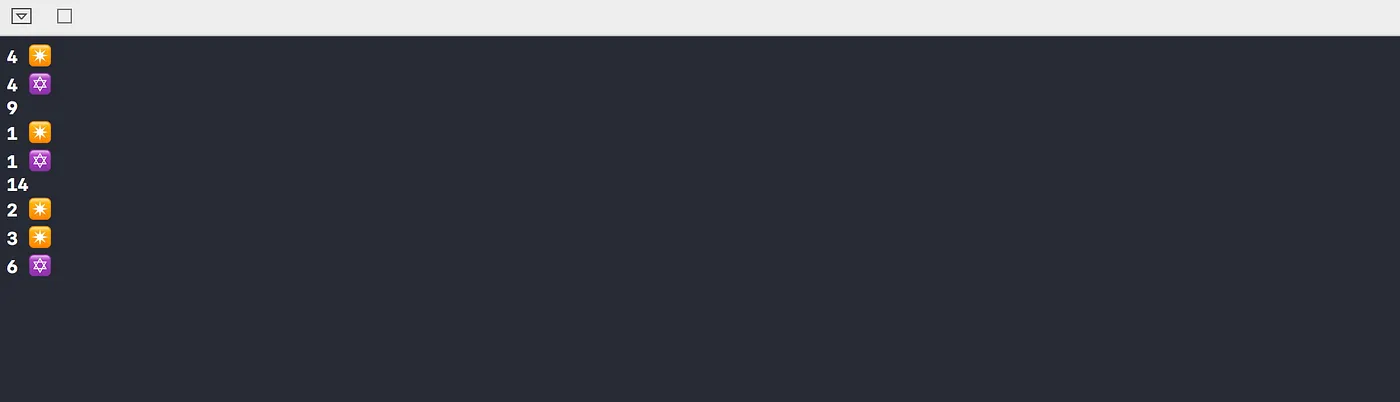

import Foundation

import PlaygroundSupport

PlaygroundPage.current.needsIndefiniteExecution = true

var value: Int = 2

let concurrentQueue = DispatchQueue(label: "queue", attributes: .concurrent)

concurrentQueue.async {

for i in 0...3 {

value = i

print("\(value) ✴️")

}

}

concurrentQueue.async {

for j in 4...6 {

value = j

print("\(value) ✡️")

}

}

concurrentQueue.async {

value = 9

print(value)

}

concurrentQueue.async {

value = 14

print(value)

}

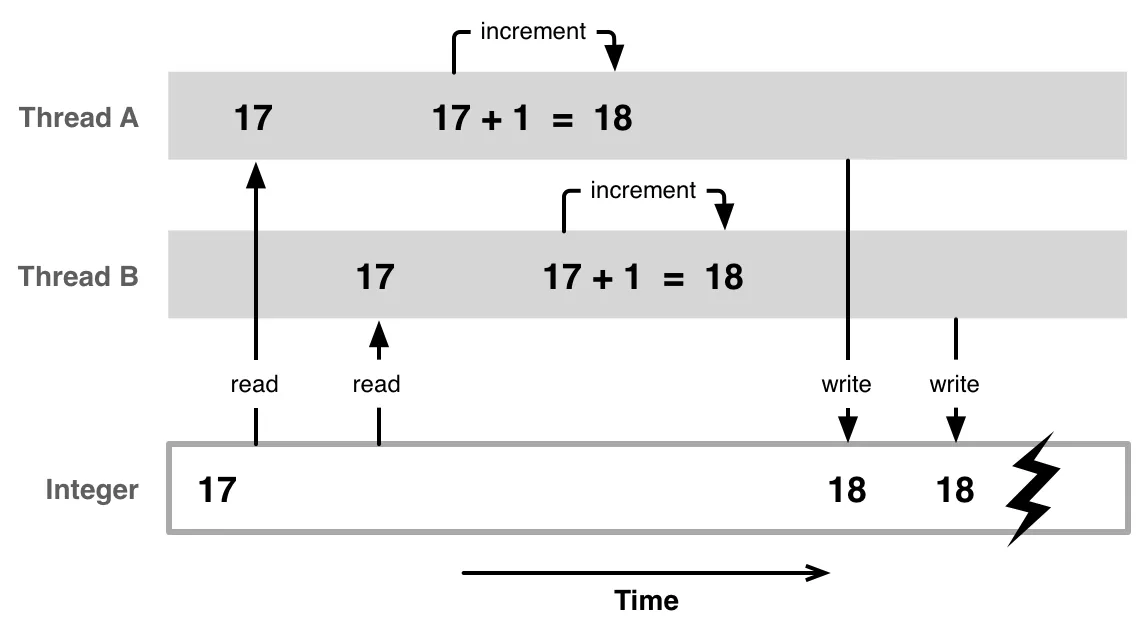

In this example, multiple threads are trying to manipulate the value variable at the same time, and because of that the wrong value gets printed.

This is a race condition, the classic readers-writers problem, where several blocks are trying to modify the same mutable value concurrently. As a result, a block may print a value that another block has just written. GCD provides an elegant solution: creating a read/write lock using a dispatch barrier.

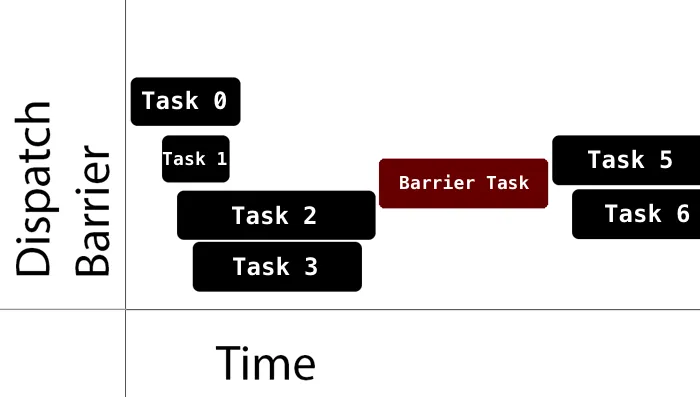

A dispatch barrier allows us to create a synchronization point within a concurrent dispatch queue. In normal operation, the queue acts just like a normal concurrent queue. But when the barrier is executing, it acts as a serial queue. After the barrier finishes, the queue goes back to being a normal concurrent queue.

GCD takes note of which blocks of code are submitted to the queue before the barrier call, and when they have all completed it calls the passed-in barrier block. Also, any further blocks that are submitted to the queue will not be executed until after the barrier block has completed. The barrier call, however, returns immediately, and the barrier block executes asynchronously.

Technically, when we submit a DispatchWorkItem or block to a dispatch queue with the .barrier flag, we indicate that it should be the only item executed on the specified queue for that particular time. All items submitted to the queue prior to the dispatch barrier must complete before this DispatchWorkItem will execute. While the barrier executes, it is the only task being executed, and the queue does not execute any other tasks during that time. Once the barrier is finished, the queue returns to its default behavior.

If the queue is a serial queue or one of the global concurrent queues, the barrier has no special effect: the block behaves like one submitted with a plain async() or sync() call. Using barriers in a custom concurrent queue is a good choice for handling thread safety in critical areas of code.

import Foundation

import PlaygroundSupport

PlaygroundPage.current.needsIndefiniteExecution = true

var value: Int = 2

let concurrentQueue = DispatchQueue(label: "queue", attributes: .concurrent)

concurrentQueue.async(flags: .barrier) {

for i in 0...3 {

value = i

print("\(value) ✴️")

}

}

concurrentQueue.async {

print(value)

}

concurrentQueue.async(flags: .barrier) {

for j in 4...6 {

value = j

print("\(value) ✡️")

}

}

concurrentQueue.async {

value = 14

print(value)

}
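The same idea is often packaged as a small wrapper type. The following is a sketch under stated assumptions rather than a definitive implementation: `SafeCounter` and its queue label are hypothetical names, reads go through `sync`, and writes go through `async(flags: .barrier)` on a private concurrent queue.

```swift
import Foundation

// Hypothetical wrapper: concurrent reads, exclusive (barrier) writes.
final class SafeCounter {
    private var storage = 0
    private let queue = DispatchQueue(label: "example.safe-counter",
                                      attributes: .concurrent)

    // Reads may overlap with other reads, but never with a barrier write.
    var value: Int {
        queue.sync { storage }
    }

    // The barrier flag makes this block the only one running on the queue.
    func increment() {
        queue.async(flags: .barrier) {
            self.storage += 1
        }
    }
}

let counter = SafeCounter()

// Hammer the counter from many threads at once.
DispatchQueue.concurrentPerform(iterations: 1_000) { _ in
    counter.increment()
}

// This read is enqueued after every barrier write, so it observes all of them.
print(counter.value)     // 1000
```

Because the queue is private and created with the .concurrent attribute, the barrier is honored. The same code on a global queue would lose that guarantee.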

4. DispatchSemaphore

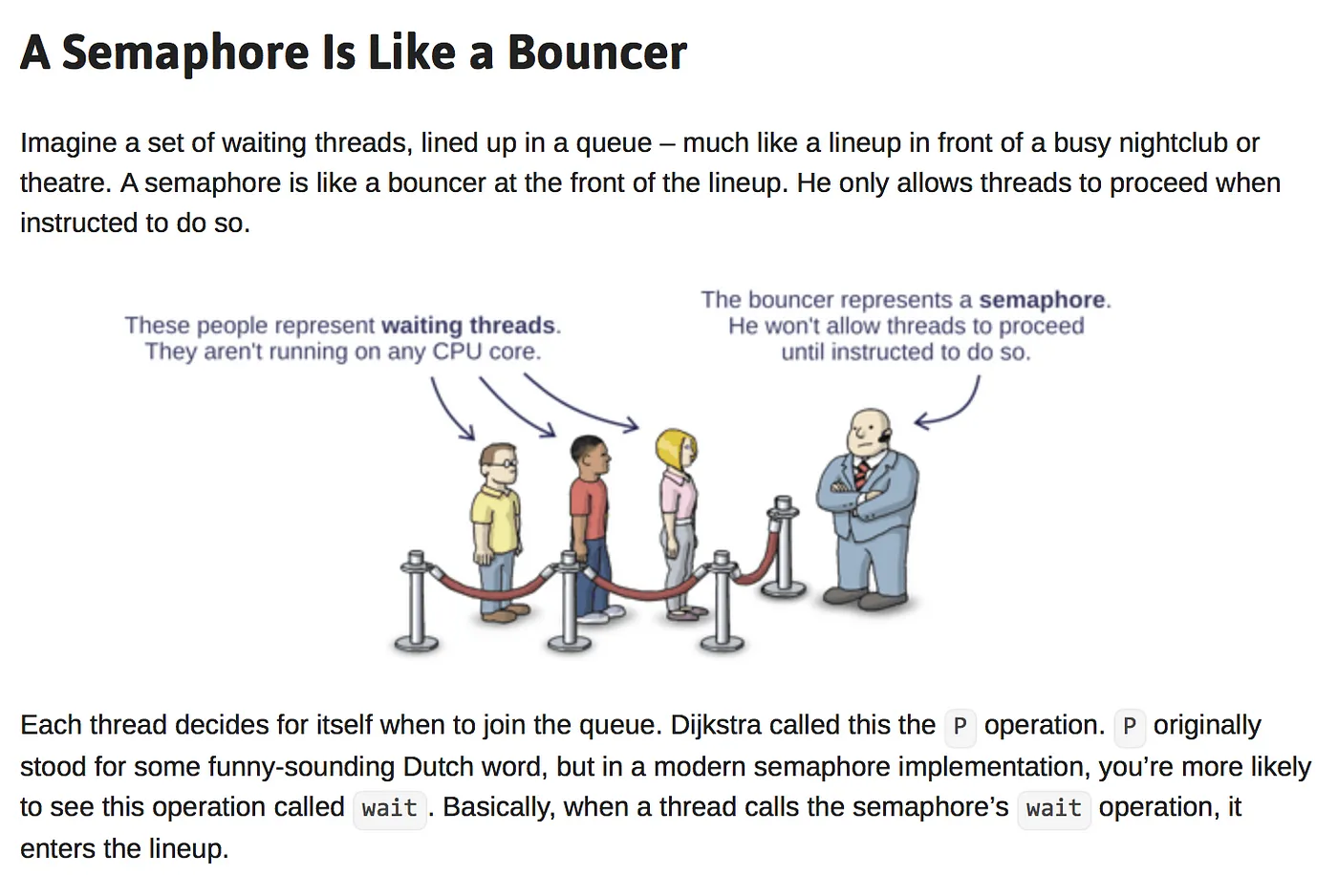

In multithreaded programming, it’s important to make threads wait. They must wait for exclusive access to a resource. One way to make threads wait, and to put them to sleep inside the kernel so that they no longer take any CPU time, is with a semaphore. Semaphores were invented by Dijkstra back in the early 1960s.

Semaphores give us the ability to control access to a shared resource by multiple threads. A shared resource can be a variable, or a task such as downloading an image from a URL, reading from a database, etc.

A semaphore consists of a threads queue and a counter value (of type Int).

The threads queue is used by the semaphore to keep track of waiting threads in FIFO order.

Counter value is used by the semaphore to decide if a thread should get access to a shared resource or not. The counter value changes when we call signal() or wait() functions.

Request the shared resource:

Call wait() each time before using the shared resource. We are basically asking the semaphore if the shared resource is available or not. If not, we will wait.

Release the shared resource

Call signal() each time after using the shared resource. We are basically signaling the semaphore that we are done interacting with the shared resource.

Calling wait() performs the following work:

- Decrement the semaphore counter by 1.

- If the resulting value is less than zero, the thread is blocked and goes into a waiting state.

- If the resulting value is equal to or greater than zero, the code continues executing without waiting.

Calling signal() performs the following work:

- Increment the semaphore counter by 1.

- If the previous value was less than zero, this function unblocks the thread currently waiting at the front of the thread queue.

- If the previous value was equal to or greater than zero, the thread queue is empty and no one is waiting.
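The wait()/signal() rules above can be sketched with a semaphore whose counter starts at 0, so that one thread waits for another to finish. The names `finished`, `result` and `outcome` are assumptions made for this illustration, and the timeout is only there so the sketch cannot hang forever.

```swift
import Foundation

// Counter starts at 0, so a wait() that arrives before signal() must block.
let finished = DispatchSemaphore(value: 0)
var result = 0

DispatchQueue.global().async {
    result = (1...10).reduce(0, +)   // stand-in for real background work
    finished.signal()                // increments the counter and wakes a waiter, if any
}

// wait(timeout:) decrements the counter and blocks until signal() or the deadline.
let outcome = finished.wait(timeout: .now() + .seconds(5))

switch outcome {
case .success:
    print("result: \(result)")
case .timedOut:
    print("timed out")
}
```

If the background block happens to call signal() before the main thread reaches wait(), the counter goes from 0 to 1 and the wait returns immediately, which is the "equal to or greater than zero" branch described above.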

This below image shows perfect example of Semaphore.

Let’s implement a semaphore on a single queue in Swift:

import Foundation

import PlaygroundSupport

PlaygroundPage.current.needsIndefiniteExecution = true

var value: Int = 2

let concurrentQueue = DispatchQueue(label: "queue", attributes: .concurrent)

let semaphore = DispatchSemaphore(value: 1)

for j in 0...4 {

concurrentQueue.async {

print("\(j) waiting")

semaphore.wait()

print("\(j) wait finished")

value = j

print("\(value) ✡️")

print("\(j) Done with assignment")

semaphore.signal()

}

}

Notice that the code above calls wait() on the semaphore first and signal() afterwards.

Now that we understand how semaphores work, let’s go over a scenario that is more realistic for an app: downloading 6 images from a URL.

First we create a concurrent queue that will be used for executing our image downloading blocks of code.

Second, we create a semaphore and set its initial counter value to 2, as we decided to download 2 images at a time in order not to take too much CPU time at once.

Third, we iterate 6 times using a for loop. On each iteration we do the following: wait() → download image → signal()

import Foundation

import PlaygroundSupport

PlaygroundPage.current.needsIndefiniteExecution = true

let concurrentQueue = DispatchQueue(label: "com.queue.Concurrent", attributes: .concurrent)

let semaphore = DispatchSemaphore(value: 2)

func performAsyncTaskIntoConcurrentQueue() {

for i in 1...6 {

concurrentQueue.async {

print("###### Image \(i) waiting for download ######")

semaphore.wait()

print("###### Downloading Image \(i) ######")

let imageURL = URL(string: "https://upload.wikimedia.org/wikipedia/commons/0/07/Huge_ball_at_Vilnius_center.jpg")!

let _ = try! Data(contentsOf: imageURL)

print("###### Image \(i) Downloaded ######")

semaphore.signal()

}

}

}

print("###### Download all images asynchronously ######")

print("############")

print("############\n")

performAsyncTaskIntoConcurrentQueue()

Let’s track the semaphore counter for a better understanding:

- 2 (Our initial value)

- 1 (Image 1 wait, since value >= 0, start image download)

- 0 (image 2 wait, since value >= 0, start image download)

- -1 (image 3 wait, since value < 0, add to queue)

- -2 (image 4 wait, since value < 0, add to queue)

- -3 (image 5 wait, since value < 0, add to queue)

- -4 (image 6 wait, since value < 0, add to queue)

- -3 (image 1 signal, last value < 0, wake up image 3 and pop it from queue)

- -2 (image 2 signal, last value < 0, wake up image 4 and pop it from queue)

- -1 (image 3 signal, last value < 0, wake up image 5 and pop it from queue)

- 0 (image 4 signal, last value < 0, wake up image 6 and pop it from queue)

From this sequence, you can see that once two threads are executing the download, every other thread must wait until one of them finishes. It doesn’t matter when a later thread calls wait(); it stays blocked until a running thread calls signal().
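To check that the semaphore really caps the number of simultaneous downloads, the sketch below replaces the network call with a short sleep and records the highest number of tasks that were inside the guarded section at once. All names here (`limit`, `active`, `peak`, the queue label) are assumptions for the illustration, and the sleep is only a stand-in for a download.

```swift
import Foundation

let limit = 2
let semaphore = DispatchSemaphore(value: limit)
let downloadQueue = DispatchQueue(label: "example.downloads", attributes: .concurrent)
let group = DispatchGroup()

let statsLock = NSLock()   // protects the two counters below
var active = 0             // tasks currently between wait() and signal()
var peak = 0               // highest value `active` ever reached

for _ in 1...6 {
    downloadQueue.async(group: group) {
        semaphore.wait()

        statsLock.lock()
        active += 1
        peak = max(peak, active)
        statsLock.unlock()

        Thread.sleep(forTimeInterval: 0.05)   // simulated download

        statsLock.lock()
        active -= 1
        statsLock.unlock()

        semaphore.signal()
    }
}

group.wait()
print("peak concurrent downloads: \(peak)")   // never more than `limit`
```

A DispatchGroup is used here only to know when all six tasks are done. The semaphore alone decides how many of them run at once.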

It’s better to use semaphores only among threads of the same priority; otherwise you could end up with a priority inversion problem.

DispatchSources

Dispatch Sources are a convenient way to handle system level asynchronous events like kernel signals or system, file and socket related events using event handlers.

Dispatch sources can be used to monitor the following types of system events:

- Timer Dispatch Sources: Used to generate periodic notifications (DispatchSourceTimer).

- Signal Dispatch Sources: Used to handle UNIX signals (DispatchSourceSignal).

- Memory Dispatch Sources: Used to register for notifications related to the memory usage status (DispatchSourceMemoryPressure).

- Descriptor Dispatch Sources: Descriptor sources send notifications related to various file- and socket-based operations (DispatchSourceFileSystemObject, DispatchSourceRead, DispatchSourceWrite), such as:

  - data is available for reading;

  - it is possible to write data;

  - a file is deleted, moved, or renamed;

  - a file’s meta information changes.

  This enables us to easily build developer tools that have “live editing” features.

- Process Dispatch Sources: Used to monitor external processes for events related to their execution state (DispatchSourceProcess), such as:

  - a process exits;

  - a process issues a fork or exec type of call;

  - a signal is delivered to the process.

- Mach related dispatch sources: Used to handle events related to the IPC facilities of the Mach kernel (DispatchSourceMachReceive, DispatchSourceMachSend).

- Custom events that we trigger: You can create a custom dispatch source with DispatchSource.makeUserDataAddSource(queue:) or DispatchSource.makeUserDataOrSource(queue:) and trigger it yourself.
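For the custom-event case, here is a minimal sketch assuming a user-data "add" source created with `DispatchSource.makeUserDataAddSource(queue:)`. The queue label and the `total` and `allDelivered` names are hypothetical. Values passed to `add(data:)` may be coalesced, so the handler can run fewer times than `add(data:)` was called, but the sum it receives is preserved.

```swift
import Foundation

let eventQueue = DispatchQueue(label: "example.custom-events")
let source = DispatchSource.makeUserDataAddSource(queue: eventQueue)
let allDelivered = DispatchSemaphore(value: 0)
var total: UInt = 0

source.setEventHandler {
    // `data` is the sum of all add(data:) calls merged since the handler last ran.
    total += source.data
    if total == 6 {
        allDelivered.signal()
    }
}
source.resume()            // sources are created suspended

// Trigger the custom event three times from the current thread.
source.add(data: 1)
source.add(data: 2)
source.add(data: 3)

allDelivered.wait()
print(total)               // 6
```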

Let’s see some use cases:

- Timer (NSTimer) is scheduled on a run loop, and by default only the main thread has a running run loop. To use it on a background thread you would have to create and run a run loop there yourself. In this situation DispatchSourceTimer can be used instead. A dispatch timer source fires an event each time the interval elapses, and its pre-set event handler runs on the queue you choose.

- If you have to monitor your files for changes, there’s an easy way to do it using a descriptor dispatch source (DispatchSourceFileSystemObject).
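The timer use case can be sketched as follows. This is an illustration under assumptions, not a production timer: the queue label, the 50 ms interval and the `ticks` counter are made up for the example, and the semaphore is only there to keep the script alive until the third tick.

```swift
import Foundation

let timerQueue = DispatchQueue(label: "example.timer")   // background serial queue
let timer = DispatchSource.makeTimerSource(queue: timerQueue)
let done = DispatchSemaphore(value: 0)
var ticks = 0

// Fire immediately, then every 50 milliseconds.
timer.schedule(deadline: .now(), repeating: .milliseconds(50))

timer.setEventHandler {
    ticks += 1
    print("tick \(ticks)")
    if ticks == 3 {
        timer.cancel()     // no further handler invocations after this
        done.signal()
    }
}

timer.resume()             // timer sources are created suspended
done.wait()
print("timer cancelled: \(timer.isCancelled)")
```

Keep a strong reference to the timer for as long as it should fire. A timer source that goes out of scope is released and stops.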

Note:

Once a dispatch task has started running, neither cancelling nor suspending the task/queue/work item will stop it. Cancelling and suspending only affect tasks that haven’t yet been called. If you must stop a running task, you will need to manually check the cancelled state at appropriate moments during the task’s execution, and exit the task if needed.
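A sketch of that manual check, assuming a DispatchWorkItem whose loop polls `isCancelled` between steps. The step count, the sleep and the `processed` and `started` names are all hypothetical.

```swift
import Foundation

var workItem: DispatchWorkItem!
var processed = 0
let started = DispatchSemaphore(value: 0)

workItem = DispatchWorkItem {
    for step in 1...1_000 {
        // cancel() does not interrupt running code, so we check the flag ourselves.
        if workItem.isCancelled { break }
        processed = step
        if step == 1 { started.signal() }
        Thread.sleep(forTimeInterval: 0.01)   // stand-in for one unit of work
    }
}

DispatchQueue.global().async(execute: workItem)

started.wait()       // make sure the item is already running
workItem.cancel()    // only sets the cancelled flag
workItem.wait()      // block until the item has actually returned

print("stopped after \(processed) of 1000 steps")
```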

References:

A Quick Look at Semaphores in Swift

Grand Central Dispatch Tutorial for Swift 5: Part 2/2

A deep dive into Grand Central Dispatch in Swift

Concurrency, (a)synchronicity and background processing

Operations:

Operation is an object-oriented way to encapsulate work that needs to be performed asynchronously.

Operation represents a single unit of work. It’s an abstract class that offers a useful, thread-safe structure for modeling state, priority, dependencies and management.

— NSHipster

As Operation is an abstract class, you don’t use it directly. Foundation provides two system-defined subclasses, NSInvocationOperation (Objective-C only) and BlockOperation, to execute tasks. With these predefined subclasses, you only have to focus on the actual implementation of your task.

An operation executes task once and can’t be used to execute it again. You execute operations by adding them to an operation queue. An operation queue executes its operations either directly, by running them on secondary threads, or indirectly using the libdispatch library (i.e. Grand Central Dispatch). Thus Operation API is a higher level abstraction of Grand Central Dispatch.

You may be wondering: What’s task? 🤔

Let’s discuss these terms:

- Task: A single piece of work that needs to be done.

- Process: A running instance of an executable, which can be made up of multiple threads. Processes are the instances of your app. Whenever you perform a cold launch of the app, the process ID changes, while it remains the same when you perform a hot launch. A process contains everything needed to execute your app, including your stack, heap, and all other resources.

- Thread: A mechanism provided by the operating system that allows multiple sets of instructions to operate at the same time within a single application. Unlike processes, threads share their memory with their parent process. This can result in problems, such as two threads changing a resource (e.g. a variable) at the same time. Threads are a limited resource on iOS. In practice a process seems to be limited to about 64 GCD threads at the same time, although this is not mentioned officially in any document and varies with context and the number of cores. The following snippet lets you observe the limit by submitting far more blocking blocks than the system will create threads for:

for element in 0..<30000 {

DispatchQueue.global().async {

print(element)

Thread.sleep(forTimeInterval: 10000)

}

}

An operation can be executed directly by calling its start() method, but that comes with its own overhead. Executing operations manually puts more of a burden on your code, because starting an operation that is not in the ready state triggers an exception. The isReady property reports on the operation’s readiness.
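A small sketch of running an operation by hand, with `first` and `second` as hypothetical names. It checks isReady before calling start() and deliberately does not start the operation that still has an unfinished dependency.

```swift
import Foundation

let first = BlockOperation { print("first ran") }
let second = BlockOperation { print("second ran") }
second.addDependency(first)

print(first.isReady)     // true: no dependencies
print(second.isReady)    // false: still waiting on `first`

// Only start an operation manually when it reports that it is ready.
if first.isReady {
    first.start()        // for BlockOperation, returns after its blocks have finished
}

print(first.isFinished)  // true
```

Adding both operations to an OperationQueue instead would remove the need for these checks, because the queue only starts operations that are ready.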

Since Operations are classes, we can use them to encapsulate our business logic.

Examples of tasks that can use Operation include network requests, image resizing, text processing, prefetching in background fetch, or any other long-running task that produces associated state or data. It can be used to implement the login sequence of an application as well. An operation can have dependencies on other operations, which is a powerful feature Grand Central Dispatch lacks. If there is a need to perform several tasks in a specific order, then operations are a good solution.

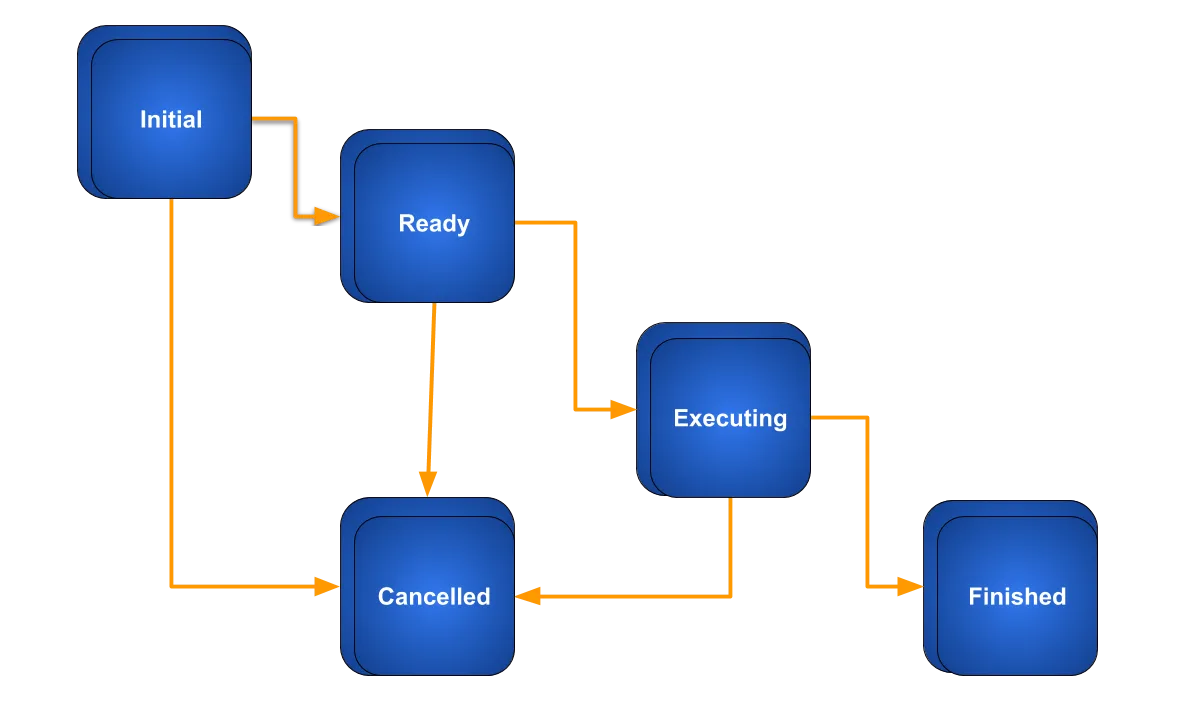

Operation Life Cycle:

Operation States:

Operation objects maintain states internally to determine when it is safe to execute and also to notify external clients of the progress through the operation’s life cycle.

isReady: It informs clients when an operation is ready to execute. This key path returns true when the operation is ready to execute now, or false if there are still unfinished operations on which it is dependent.

isExecuting: This key path tells us whether the operation is actively working on its assigned task. isExecuting returns true if the operation is working on its task, or false if it is not.

isFinished: It informs clients that an operation finished its task successfully or was cancelled. An operation object does not clear a dependency until the value at the isFinished key path changes to true. Similarly, an operation queue does not dequeue an operation until the isFinished property contains the value true.

isCancelled: The isCancelled key path informs clients that the cancellation of an operation was requested.

If you cancel an operation before its start() method is called, the start() method exits without starting the task.
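This behavior is easy to observe with a BlockOperation. In the sketch below, `didRun` is a hypothetical flag that the block would set if it ever executed.

```swift
import Foundation

var didRun = false
let operation = BlockOperation { didRun = true }

operation.cancel()   // only sets the cancelled flag; nothing has started yet
operation.start()    // sees isCancelled and finishes without running the block

print(operation.isCancelled, operation.isFinished, didRun)   // true true false
```

Note that the operation still moves to the finished state, which is what lets dependent operations and the queue move on.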

OperationQueue:

An operation queue executes its queued Operation objects based on their priority and readiness in FIFO order. After being added to an operation queue, an operation remains in its queue until it is finished with its task. An operation can’t be removed directly from a queue after it has been added.

Operation queues retain operations until they’re finished, and queues themselves are retained until all operations are finished. Suspending an operation queue with operations that aren’t finished can result in a memory leak.

Operations within a queue are organized according to their readiness i.e. (isReady property returns true), priority level & dependencies, and are executed accordingly. If all of the queued operations have the same queuePriority and are ready to execute when they are put in the queue, they’re executed in the order in which they were submitted to the queue. Otherwise, the operation queue always executes the one with the highest priority relative to the other ready operations.

An operation object is not considered ready to execute until all of its dependent operations have finished executing.

Block Operation:

An operation that manages the concurrent execution of one or more blocks. BlockOperation class extends from Operation class. You can use this object to execute several blocks at once without having to create separate operation objects for each. When executing more than one block, the operation itself is considered finished only when all blocks have finished executing.

Blocks added to a block operation are dispatched with default priority to an appropriate work queue.

let queue = OperationQueue()

for i in 1...3 {

let operation = BlockOperation()

operation.addExecutionBlock {

if !operation.isCancelled {

print("###### Operation \(i) in progress ######")

let imageURL = URL(string: "https://upload.wikimedia.org/wikipedia/commons/0/07/Huge_ball_at_Vilnius_center.jpg")!

let _ = try! Data(contentsOf: imageURL)

OperationQueue.main.addOperation {

print("Image \(i) downloaded...")

}

}

}

operation.queuePriority = .high

queue.addOperation(operation)

}

queue.maxConcurrentOperationCount = 2

queue.waitUntilAllOperationsAreFinished()

queue.cancelAllOperations()

An operation can be executed on completion of another operation by using the addDependency method.

let queue = OperationQueue()

for i in 1...3 {

let dependentOperation = BlockOperation()

dependentOperation.addExecutionBlock {

if !dependentOperation.isCancelled {

print("###### Operation \(i) in progress ######")

let imageURL = URL(string: "https://upload.wikimedia.org/wikipedia/commons/0/07/Huge_ball_at_Vilnius_center.jpg")!

let _ = try! Data(contentsOf: imageURL)

print("Image \(i) downloaded...")

}

}

dependentOperation.queuePriority = .high

let operation = BlockOperation {

print("Execute Operation \(i), Once dependent work is done")

}

operation.addDependency(dependentOperation)

queue.addOperation(operation)

queue.addOperation(dependentOperation)

}

queue.maxConcurrentOperationCount = 2

queue.waitUntilAllOperationsAreFinished()

queue.cancelAllOperations()

NSInvocationOperation:

In Objective-C we can create an NSInvocationOperation, but it’s not available in Swift.

Asynchronous Operation:

Asynchronous operations can be created by subclassing Operation class.

// Created by Vasily Ulianov on 09.02.17, updated in 2019.

// License: MIT

import Foundation

/// Subclass of `Operation` that adds support of asynchronous operations.

/// 1. Call `super.main()` when override `main` method.

/// 2. When operation is finished or cancelled set `state = .finished` or `finish()`

open class AsynchronousOperation: Operation {

public override var isAsynchronous: Bool {

return true

}

public override var isExecuting: Bool {

return state == .executing

}

public override var isFinished: Bool {

return state == .finished

}

public override func start() {

if self.isCancelled {

state = .finished

} else {

state = .ready

main()

}

}

open override func main() {

if self.isCancelled {

state = .finished

} else {

state = .executing

}

}

public func finish() {

state = .finished

}

// MARK: - State management

public enum State: String {

case ready = "Ready"

case executing = "Executing"

case finished = "Finished"

fileprivate var keyPath: String { return "is" + self.rawValue }

}

/// Thread-safe computed state value

public var state: State {

get {

stateQueue.sync {

return stateStore

}

}

set {

let oldValue = state

willChangeValue(forKey: state.keyPath)

willChangeValue(forKey: newValue.keyPath)

stateQueue.sync(flags: .barrier) {

stateStore = newValue

}

didChangeValue(forKey: state.keyPath)

didChangeValue(forKey: oldValue.keyPath)

}

}

private let stateQueue = DispatchQueue(label: "AsynchronousOperation State Queue", attributes: .concurrent)

/// Non thread-safe state storage, use only with locks

private var stateStore: State = .ready

}

Pass data between operations:

There are various approaches to pass data between operations. The most widely adopted one is the adapter pattern: you create a new BlockOperation that passes the data from one operation to the next. This article shows all the approaches in a nice way:

https://marcosantadev.com/4-ways-pass-data-operations-swift/
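As a rough sketch of the adapter approach (the two operation classes and their `input`/`output` properties are hypothetical and not taken from the linked article), a BlockOperation sits between a producer and a consumer and copies the value across once the producer has finished:

```swift
import Foundation

// Hypothetical producer: pretends to fetch a number.
final class FetchNumberOperation: Operation {
    private(set) var output: Int?
    override func main() {
        output = 21
    }
}

// Hypothetical consumer: doubles whatever it was given.
final class DoubleNumberOperation: Operation {
    var input: Int?
    private(set) var output: Int?
    override func main() {
        guard let input = input else { return }
        output = input * 2
    }
}

let fetch = FetchNumberOperation()
let doubler = DoubleNumberOperation()

// The adapter runs after `fetch` and before `doubler`, handing the value over.
let adapter = BlockOperation {
    doubler.input = fetch.output
}
adapter.addDependency(fetch)
doubler.addDependency(adapter)

let operationQueue = OperationQueue()
operationQueue.addOperations([fetch, adapter, doubler], waitUntilFinished: true)

print(doubler.output ?? -1)   // 42
```

The dependencies, not the order in the array, guarantee that the adapter sees the producer's finished output.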

Benefits of using Operations over Grand Central Dispatch:

Dependency: Operations provide an API to add dependencies between operations, which enables us to execute tasks in a specific order. An operation is ready when every dependency has finished executing.

Observable: Operation and OperationQueue have a lot of properties that can be observed using KVO.

State of Operation: You can monitor the state of an operation or operation queue: ready, executing or finished.

Control: You can cancel an operation, and you can suspend and resume an operation queue.

Max Number of Concurrent Operations: You can specify the maximum number of queued operations that can run simultaneously. A serial operation queue can be created by setting maxConcurrentOperationCount to 1.
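For instance, a serial operation queue might look like the sketch below, where `serialQueue` and `order` are names invented for the example. With a limit of 1 and equal priorities, the operations run one at a time in the order they were added, so appending to the array needs no extra locking.

```swift
import Foundation

let serialQueue = OperationQueue()
serialQueue.maxConcurrentOperationCount = 1   // one operation at a time

var order: [Int] = []

for i in 1...5 {
    serialQueue.addOperation {
        order.append(i)   // safe here: operations never overlap on this queue
    }
}

serialQueue.waitUntilAllOperationsAreFinished()
print(order)   // [1, 2, 3, 4, 5]
```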
